
The Edge Episode 28: Robots with Jeff Mahler

Show Notes

There’s a paradox in robotics that says: what’s easy for humans is hard for robots, and vice versa. Complex calculations, for instance, are the domain of machines. Simple motor tasks like picking up an object, on the other hand, can stump a robot. That’s where our guest comes in. Jeff Mahler, Ph.D. ’18, has spent his career working on improving the capabilities of robotic object manipulation. After completing his postdoctoral work at UC Berkeley, Mahler went on to co-found Ambi Robotics with Professor Ken Goldberg, Stephen McKinley, M.S. ’13, Ph.D. ’16, David Gealy ’15, M.S. ’17, and Matt Matl, M.S. ’19, building AI-powered robots for warehouse operations. He talks to us about the state of robot assistants and how soon, if ever, we might expect a full robot revolution.

Further reading:

- TechCrunch article on the launch of AmbiStack

- UC Berkeley News article on Berkeley’s latest breakthroughs in robot learning

- WIRED article covering Amazon’s new tactile-sensing warehouse robot, Vulcan

- Mahler et al.’s 2019 Science Robotics paper, which introduces Dex-Net 4.0

- Watch Dex-Net 2.0 picking up objects

This episode was written and hosted by Nathalia Alcantara and produced by Coby McDonald.

Art by Michiko Toki and original music by Mogli Maureal. Additional music from Blue Dot Sessions.

Transcript:

NATHALIA ALCANTARA: OK, let’s start with a thought experiment: Picture a robot. Really, do it, I’ll wait. I bet it has arms, a head, and, in its own way, looks a little bit like you and me.

But there’s another kind of robot that rarely gets the spotlight.

If you’ve ordered something online recently (and if you didn’t, wow, congratulations), there’s a good chance a robot touched it before you did. Major e-commerce companies have been using robots to sort, pick, carry, and move packages around their warehouses. Amazon, for example, has been introducing robotic automation systems since 2012 and today has more than 750,000 robots.

Some of them may have arms, but that’s about the extent of their resemblance to humans. Think oversized Roombas lifting and moving packages, or three-foot-tall machines that glide across the floor with one long robotic arm and suction cups for fingers. These robots are set to transform industries by slashing costs, speeding up work, and changing warehouse jobs worldwide.

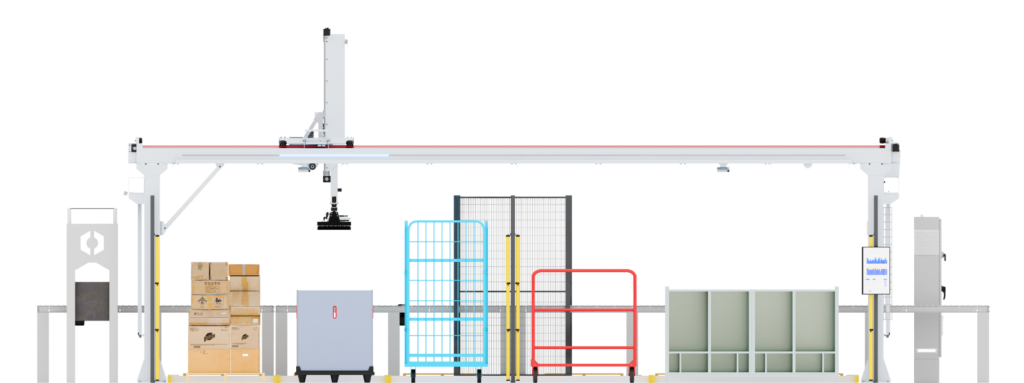

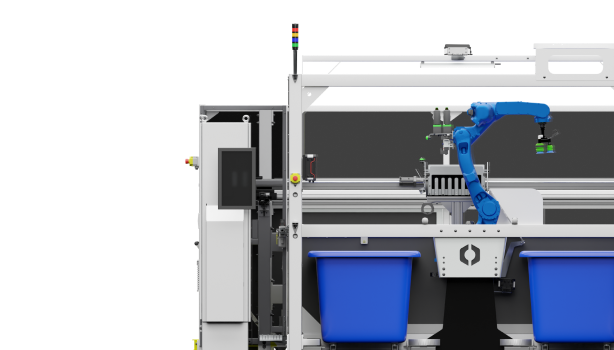

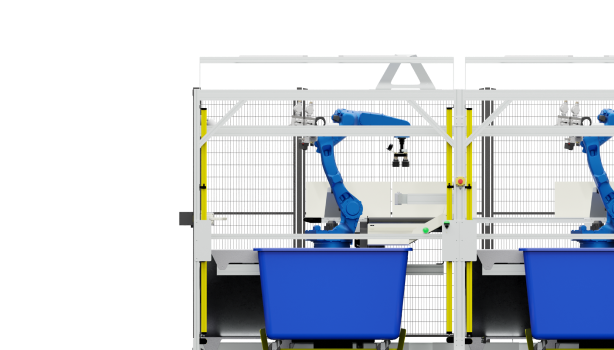

Our guest today has spent his career working on these types of robots. In 2019, he, along with four other Berkeley researchers he met during his PhD at Cal, co-founded Ambi Robotics. In January, they launched AmbiStack, their newest robot—an arm mounted above an infeed conveyor belt that uses AI to play real-life Tetris.

That may sound simple, but as Ambi co-founder and Berkeley professor Ken Goldberg says, paraphrasing Moravec’s paradox: “What’s easy for humans is hard for robots, and what’s hard for humans is easy for robots.”

Even though AI-powered robots can beat us in strength and even skills like math, chess, and Go, they struggle to do things most of us do on autopilot: folding a t-shirt, pouring a cup of coffee, grabbing a book.

So, what can we realistically expect robots to do in the real world? Will we see robot butlers like The Jetsons’ Rosie rolling around anytime soon? Are robots really about to change our lives? Or…what if these warehouse robots already have?

[MUSIC IN]

NAT: This is The Edge, produced by California magazine and the Cal Alumni Association. I’m your host for today, Nathalia Alcantara. Leah’s off digging for new stories, but don’t worry, she’ll be back soon with more episodes.

Our guest today is Jeff Mahler, co-founder and CTO of Ambi Robotics, a Berkeley-born company that’s leading the charge in automating warehouses.

[MUSIC OUT]

In this episode, Mahler talks about the state of AI-powered robot development, why grasping is such a big challenge, and whether we should expect robots to have a breakthrough “ChatGPT moment” anytime soon.

NAT: Welcome, Jeff. Thank you for being here.

JEFF MAHLER: And thank you for having me, Nathalia.

NAT: I know that you did some manual labor before school, and you worked as a cashier, I believe, and you talked about how that influenced your interest in robotics. Can you share more about that and what else first sparked your interest in robotics?

JEFF: Yes, I did a number of jobs involving manual labor when I was a teenager. I delivered newsletters, I worked as a cashier in a grocery store, and in those jobs, there was a need to do a manual action many, many times over and over. In the cashier job, for example, we would have to grab and scan items very quickly. In fact, we were measured on how fast we were scanning items and checking people out at the register. My mind being more mathematically inclined, I was always trying to think about how to optimize this process: how do I stage the items to go as quickly as possible, memorize the numbers of all the produce, and so on. I would bring that kind of thinking to a lot of these types of tasks. I was also interested in programming on the side, initially to make video games, and I started to put those two things together and think about how I could take some of these insights about optimizing manual processes and maybe have an autonomous system, a robot, do these processes instead.

NAT: And what were some of the first steps that you took after developing that interest?

JEFF: I initially joined a robotics club at the University of Texas at Austin, where I did my undergraduate degree. We were doing things like racing robots, following lines, and so on. Later in my undergraduate studies, I got into computer vision because I saw a really big opportunity to have machines that interact with the world around them. So I started working in Professor Sriram Vishwanath’s lab at the University of Texas on scanning items. This was just when NVIDIA GPUs and the Microsoft Kinect were coming out, and we were leveraging those technologies to scan items by essentially pressing a record button on a device, kind of like a big iPad, walking that device around a room, capturing all that data, and putting it into a 3D model. The next step was to have a machine that would actually move and interact with that 3D model, that visual representation of the world. So I went on to do my PhD in Professor Ken Goldberg’s lab, where we started looking at surgical robotics initially, and eventually I got really interested in this problem of robotic grasping.

NAT: I know that early on, you didn’t see it as a hard problem, and at some point that changed. So what shifted your perspective and made you realize how truly difficult grasping is?

JEFF: That’s a really great point. It’s one of those things that really makes us human, the fact that we can look at items and pick them up, twirl them around, and orient them in various ways without even really thinking about it. We often don’t see this as being intelligent. But for a robot, it’s very, very hard. When I initially looked at this problem, I thought, well, this probably isn’t that hard. It’s not very hard for me to do it. But once I tried making a robot actually pick up items, I quickly realized how hard it actually is. There’s uncertainty in the sensing: for example, there’s noise in cameras like the Microsoft Kinect that I mentioned earlier and in the depth measurements they produce. There’s imprecision in control, meaning that if we ask a robot to move to a certain position, it might not go exactly there; it might be slightly inaccurate. There’s also what we call, in the academic world, partial observability, which means that robots can only see one view of the world at a time. There could be items hidden behind other items in front of them, which we call occlusions, and this also carries into things like frictional properties. The friction on the surface of a table can vary depending on which direction you push an item, so the item can move almost randomly, and the robot may not be able to directly observe these things. When you couple all of those things with the vast diversity of items that we’d like to have a robot interact with, it becomes a grand challenge of robotics to actually enable robots to pick up and handle all these different types of items.

NAT: I find it fascinating that it’s something incredibly difficult, yet we do it every day, all the time.

JEFF: Yes, absolutely. This is what made me so excited about this problem: seeing how challenging it was and how much it uniquely made us human to be able to do these sorts of things without thinking. And Ken was a huge influence here. He had already been working in robotic grasping for quite a long time when I joined his lab, and he saw that there was a big opportunity to leverage new advances in AI to revisit some of the problems in robot grasping that had been very hard for a long time.

NAT: I would love to hear more about what your PhD experience was like. Looking back, what were some of the biggest lessons you took away from your research at Berkeley, both technically and in terms of how you see the field evolving?

JEFF: I was fortunate in my PhD to start at a time when AI was going through a revolution. Deep neural networks were just starting to be recognized as the method going forward for problems like image recognition and language modeling, like translation, and they soon got into AIs playing video games and things like that. So there was almost a Cambrian explosion of ideas about different ways you could use AI in different applications, and in robotics, the time was right to start leveraging this AI and getting real results. But the challenge was that we didn’t have the data to fuel this AI. Modern AIs like ChatGPT are trained on internet-scale data, but in robotics, especially at the start of my PhD, we basically had no data at all. Yes, there were some robots in manufacturing, but that data wasn’t being collected in a way that was useful for AI training, and plus, those robots were doing very repetitive motions. We quickly realized this, and my PhD started moving into this data problem: How can we collect training data at scale and get AIs to work without having to buy, say, 100 robots and have them start doing random actions? We had a really interesting breakthrough where we were able to predict very well whether or not a robot could pick up an item based on analytic models of the forces and torques that a robot could apply to the item when it contacts it with its fingers. Essentially, we could turn the problem of grasping items into a simulation, or a video game, where the robot would try to pick up different items, grasping them or maybe failing to grasp them a number of times, and collect millions of data points essentially overnight, rather than taking years’ worth of time in the real world.

NAT: When you say simulated, does that mean it was all digital, almost like the robot was dreaming about it, or was the robot trying things and learning like a child? When it learns in the simulated world, what exactly does that look like?

JEFF: Yeah, that’s a great question. There are actually both elements in the simulator. It is learning in a video game, where things can happen much faster. It’s a little bit like when Neo learns kung fu in The Matrix and it gets downloaded into his brain very quickly. There’s an element of that sort of speed of learning, and there’s also an element of getting better over time. But what’s slightly different from what we call ab initio, or blank-slate, learning is that in simulation, we can know where all the items are, and we can also leverage things like a teacher: a teacher that’s able to see where everything is, know what everything weighs, and actually tell the robot AI how to grasp this item or that item in a different situation. So we can also use that sort of teaching-based method, or algorithmic supervision, to speed up the robot’s learning in simulation.

NAT: Quick sidebar here: When Jeff mentions a “teacher,” he’s talking about training robots in a simulated, or “fake,” world, where an algorithm that can see everything tells the robot how to grasp each item. At Ambi, that simulated world is a computer-generated warehouse where the robot can practice tasks like picking up boxes, sorting items, and placing things on shelves. Robots can also learn there through reinforcement learning, a technique used in part to train chatbots like ChatGPT: if the robot does something right (like grabbing a box correctly), it gets a reward; if it messes up (like dropping something), it gets a penalty. The robot repeats the task thousands or even millions of times in simulation, getting a little better each time. Once it performs well virtually, it can transfer those skills to the real world, which is much faster than learning through trial and error in physical space.

NAT: And that’s what started the Dex-Net project, right? I understand you started it in 2017 after finding a test-tube-handling robot in a dumpster in Berkeley. Can you talk about that and the original Dex-Net design?

JEFF: Absolutely, that’s a great part of this story. We needed data, and in the early part of my PhD, we didn’t have it. I was talking with two of my co-founders at Ambi Robotics, Stephen McKinley and David Gealy, who are on the mechanical engineering side, about our need to set up a robot as a data collection rig. Being very resourceful, they knew that there was a lot of extra equipment in the UC Berkeley basements, and they found these test-tube-handling robots called Zymark Zymates and brought them back to life with new control systems. So we set up this test bench where the robot would try to pick up 3D-printed items over and over. It was important that we had the capability to 3D print here, because we printed each item from its virtual representation in the simulated world, so we had the same item both in the real world and in simulation. That meant we could try many, many different grasps on these items and compare the results very closely with what a simulator predicted. And that’s where the Dex-Net project came in. Once we realized we had those relevant simulation models, we gathered a huge data set of 3D CAD models from various places on the internet, like Thingiverse, which hosts models made for 3D printing. We created a database of different items that could then be randomly dropped in bins or put on shelves, and we could have the robot play that video game of attempting to grasp them, getting a higher score when it succeeded, and training the AI to work really well.

And this culminated in us having AI models that were surprisingly able to pick up a great diversity of different items in the real world, even in highly cluttered scenarios like dropping them all into a bin. In the final project of Dex-Net, Dex-Net 4.0, we were able to have an AI trained completely in simulation that could pick up very challenging items randomly cluttered in a bin and choose whether to grab each one with a two-finger gripper or a suction cup. This was really exciting. It led to us being covered in The New York Times and MIT Technology Review, and we even had a chance to present our research to Jeff Bezos himself at his MARS 2018 conference in Palm Springs. That was a really exciting moment, because we got to see his reaction, and it reinforced for us that this was really a breakthrough technology that could give robots new capabilities that maybe even some of the biggest companies weren’t onto yet.

NAT: What was his reaction?

JEFF: He loved it. We had been pretty nervous. We weren’t sure if he was going to come at all, because there’s no guarantee. But he stayed there for a while. He invited a bunch of people over, and then I started getting nervous, because they were all trying to trick the robot with different items. We had brought our own set of items, but one associate took off their shoe and put it into the bin. Somebody else took a necklace off and put it into the bin. There were things like cigars and avocados, very random items going in there, but the robot was able to pick them all up. It was a big success.

NAT: All of them?

JEFF: Almost all of them. There were some that were hard, but it did get them eventually. The necklace was a very hard one. It had to try a few times.

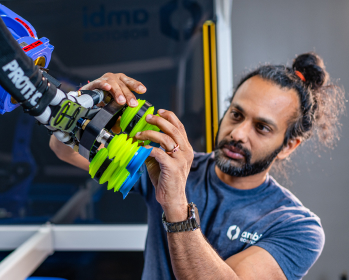

NAT: Tell me a little bit about the company. Why did you guys choose to focus (correct me if I’m wrong) on suction-based systems rather than humanlike hands, which I think most people would associate with grasping?

JEFF: We started with suction cups for a few different reasons, the big ones being that we need very high reliability and something that can grasp items in a lot of difficult scenarios. A good example: imagine an item in the corner of a bin. The robot needs to reach all the way down in there to grab it. With suction cups, if you’re able to reach the surface of an item and turn on suction, you can grab it, versus having to wrap fingers around the item. Around the time we did this demonstration for Jeff Bezos, we saw a big opportunity to take our technology and make an impact in the real world. This is something that all of my co-founders had been really interested in for a long time: Ken Goldberg, Steve McKinley, David Gealy, and Matt Matl, who’s on the software side. We loved making these fun robot demos and videos, but it only really mattered to us if it was actually going to help people. When we looked at different market spaces like manufacturing, recycling, and logistics, we saw a big opportunity to help in logistics in particular, because consumer demands are changing really rapidly. People always want items faster and cheaper. They’re shopping more online, and this is putting a lot of stress on the companies that deliver those goods. A lot happens between your online shopping cart and your front door, and a lot of times those items are handled by people, by human hands, many, many times along the way. If you scale this up to what’s going on over the entire world, there are trillions of times a year that human hands touch various items in that supply chain.

And the challenge is that in a lot of cases these are very repetitive jobs, and the repetitive motions of handling these items are often highly injury-prone. Especially with heavier items lifted at shoulder height or above, they can lead to repetitive motion injuries very quickly. We saw a big opportunity for human-collaborative systems: we knew robots couldn’t do everything, but they could do a lot of those repetitive motions, and the person who used to be sorting by hand could operate the machines and be upskilled into a robot operator role. So we started taking the technology in that direction and building products that essentially act as productivity multipliers for workers in the supply chain.

And so, coming back quickly to the suction cup point, this is where reliability is really critical. The users of these systems want the robot working 24 hours a day without breaking down, because when it does break down, those workers have to go back to sorting by hand. That’s why we prize reliability so highly.

NAT: I wonder, what would you say to somebody who would argue that perhaps some of these companies don’t treat their workers in the best possible way, and that’s why these jobs are so prone to injury. I’m just wondering, do you think it is inevitable that these are injury-prone jobs?

JEFF: I think there is some inevitability to it, and here’s why. Consumers want faster shipping. They want lower costs at all times, and it becomes a race to the bottom where the companies that are able to deliver on that have to have people sorting these items at extremely high rates. The challenge is that if one of those companies is able to do something faster and better by having people do injury-prone motions, unfortunately, they’re kind of winning in this market space, because, again, they’re giving the consumer what they want. And that’s a challenge, right? This is a really complex system where people need goods. You know, we’re delivering things like medicine as well, and people want to be able to get those things quickly, but in order to do that, historically, there have had to be people handling a lot of those goods. So we see the future as being robots that handle these items and multiply productivity. The same way that you wouldn’t hire a delivery driver without a delivery vehicle, why have a package handler without a robot?

NAT: Do you think we’re anywhere close, right now, to the point where robotic labor is more cost-effective than humans, or cost-effective enough to replace humans at scale?

JEFF: It’s not about replacing humans, but about having the robot and human working together be more cost-effective than the process where the human was doing everything themselves. And for that, we’re getting there. We are seeing great ROI for a customer in things like parcel sorting. Today, I think the best returns on investment are where there’s a high volume, so the robot could be doing the same motion for 10 or more hours a day. And the costs continue to go down for things like the motors and other components going into the robots, the computers, GPUs, and so on, so the amount of time a robot needs to operate per day to deliver that benefit alongside the person is only going down from here.

NAT: So from what I’m hearing, the idea is not a fully autonomous warehouse. It’s more a collaboration between robots and humans?

JEFF: Yes, that’s right. Humans are really important for handling what we call exceptions: things like a damaged package that comes through, or a package whose barcode is damaged so we can’t scan it and can’t figure out where it should go. Sometimes decisions have to be made on the fly about what to do with those items or how to handle overflows, and humans are really important for that. We want robots to do what they’re best at, which is these repetitive motions that need to be done over and over again all day in the warehouse. Moravec’s paradox states that it’s comparatively easy to have an AI beat humans at logical tasks like playing chess, compared to giving an AI the grasping and manipulation capabilities of a toddler, who can pick up random items around them that they haven’t seen before. It is counterintuitive, because, again, we don’t think of those things as being what makes us intelligent. However, it is a really important part of what makes us human.

NAT: Another quick sidebar here. We are about to talk about DeepSeek, a Chinese AI company that got a lot of attention in January when it released a chatbot that performed surprisingly well, even competing with models like ChatGPT. What stood out is that it was trained on publicly available data and built without a Big Tech budget.

NAT: You posted recently that DeepSeek is great news for robotics. Why do you think that?

JEFF: DeepSeek is great news for robotics because, ultimately, it’s driving down the cost of getting high performance in AI. Or rather, I should say DeepSeek is an illustration of what’s possible in terms of lower cost. In AI robotics, it’s really hard to make that robot-plus-human process more economical than just having a person there, as we talked about earlier. The more capabilities we can get for lower cost, the better off we are. It has a lot of other implications, too, such as being able to compute things on the edge. That’s really important for our customers, because having the GPUs that run the AI directly connected to the robot increases the reliability, throughput, and security of our systems, which our customers care greatly about. And finally, having these open-source models allows the community at large to iterate much faster, so it’s helping progress in AI robotics overall.

NAT: And I’m assuming the data is also a huge element in there.

JEFF: Yes. One exciting thing about DeepSeek as well is that it’s based on reinforcement learning, where the model is learning a little bit more like a baby, as you mentioned earlier: trying different things out, seeing what works and what fails, and doing more of the things that work over time. That is a big trend in robotics right now, and it’s sort of coming back: the robot starts from a base AI model that has already learned from lots of data and then gets better at doing a task over time. We’ve deployed this in our latest product announcement, AmbiStack, where the robot stacks items densely together. That type of reinforcement learning is really important for the robot to figure out how to take random items it’s never seen before and fit them together densely, so that you can get as many items as possible into a container or onto a truck and minimize transportation costs. So we’re very excited about that.

NAT: And speaking of ChatGPT, LLMs, and DeepSeek, there seems to be a growing sense that humanoid butlers are just around the corner. You recently wrote that 2023 was kind of the year of ChatGPT, and in early 2024 there was a sense that something even greater was coming. Brett Adcock of Figure AI, for example, said that 2024 would be the “year of embodied AI,” and it seemed inevitable that robots would soon unlock the kinds of emergent capabilities that we’ve seen in LLMs. As you pointed out, that didn’t happen. So can you talk about what actually happened in 2024 and what we can realistically expect in the coming years?

JEFF: 2024 was a very exciting year in AI and robotics, no doubt about it. We saw a surge of interest in embodied AI and what could be possible with general-purpose robots like humanoids, some of which have legs and walk. But it will take a long time. The good news is that this interest is there, and there’s a lot of investment in this space, which is going to fuel a lot of new development. But I would be cautious about predicting that it will happen in the next couple of years, because there is a long life cycle for a complex hardware product like this. We can look at the world of autonomous driving, for example, for a lesson. There were a lot of predictions in the early 2010s that we would have Level 5 autonomous vehicles by 2016 or so. And Waymo only announced in 2024 that it could operate freely in San Francisco with customers signing up on the app. That’s a great accomplishment, but it’s rumored that they still require human interventions every several miles. So I think it’s important to recognize that these things take a long time to reach a high level of reliability, where they’re not making critical mistakes. Safety is a big piece of this puzzle. I think there’s another element that is sometimes overlooked with humanoid robots, which is that there’s still going to be a big place for robots that do specialized tasks, the way that a car augments human capabilities: we can sit in the seat, start driving, and move around, rather than having, say, a band of 10 humanoids hoist us up on a platform and run around with us. There’s reason to believe that specialized systems designed to augment humans will have a big future as well, and that’s what we’re really excited about at Ambi Robotics.

NAT: There’s been a shift in AI talent from academia to industry, and this has been happening for a long time. Some people call it the “AI brain drain.” I’m just wondering if you have any thoughts on whether this shift is good or bad for the field in the long term.

JEFF: That’s a really interesting question. I am personally excited about a lot of talent going out and trying to make an impact with the technology that is being produced right now. It’s certainly still important to have academic research, with folks who are really trying to answer fundamental questions and not just ones that are necessarily about making money now. I do think a lot of talent is still staying in academia and focusing on those things. But I think there’s a nice balance today, and we’re actually making faster progress by having more of this research done at scale in industry and tested against real-world problems, which, in AI robotics, we didn’t necessarily have for things like grasping until very recently.

NAT: With all emerging technologies, there’s always a human or real-world impact. With robotics, of course, most people think about, you know, job displacement, economic disruption, and all of that. So I’m also curious to hear your thoughts, in general, on how researchers and companies can ensure that robotic automation doesn’t overlook those risks and impacts. Is there anything that should be done more, or less?

JEFF: Yes, I feel strongly that folks should be designing for humans. We call it human-centric design here at Ambi. The fact that these machines can do things we previously had humans do can be very scary, and that’s understandable. However, there are a lot of things that these machines can’t do, and sometimes that’s lost in the noise of what’s happening with AI and so on. But we’ve seen in our experience that the real power of this new technology comes when it is designed for people, and the robot and people working together can do something greater than the person by themselves. We’ve trained over 500 robot operators at Ambi, all people who used to be sorting packages by hand, and the feedback has been very, very positive. They can get started with the machine very quickly, with about a day of training, and now they get to work with new technology, learn about it, and they’re not hurting themselves in the process. On top of that, we often see these workers end up getting higher pay as well, because they’re doing slightly more skilled work by working with these robots.

I think the world will benefit most from people and these technologies working together, and we’ll trend in that direction. As for the current administration, I think we’re still largely waiting to see how that impacts robotics. There’s certainly been a lot of excitement about the economy, and fundraising has continued, but I think it’s too early to see the full impact there.

NAT: What are some of the capabilities that you’re working on that you’re most excited about?

JEFF: Yes, so AmbiStack is certainly one of those things. It may seem sort of straightforward, and that’s actually exciting to us, because this problem of stacking items in logistics is very fundamental. This robot is actually a specialized robot. A lot of the future of robot manipulation in logistics is really about these sorts of placement problems, where the robot has to orient an item, maybe insert it into something, pack it into something, put it on a shelf, and so on. So this is a first step in that direction.

NAT: That’s awesome. I wonder what that looks like, you know, for somebody who doesn’t understand robotics.

JEFF: I think it may be easiest to visualize if you think about the cranes at the Port of Oakland. It doesn’t look exactly like those, but it has an overhead structure where the robot moves around largely above head height. There’s a conveyor bringing items to the robot, and it picks items off that conveyor, puts them into containers, and sorts them. It might get a scan of a barcode and decide that this item needs to go into this container and another one into a different one. This robot uses AI to make these sorts of decisions. One of the cool things it can do is decide not to place an item yet, moving it over to the side until there’s a better time later. For example, it may not want to put a small, lightweight item at the bottom of a stack, because it could get crushed and the whole thing could fall over later on.

NAT: What kinds of things should we be expecting in the next few years, realistically?

JEFF: I think that one thing that is going to be important for the future is having robot hands that can orient items without losing their grip on them and that can feel the surface of the items they're touching. Tactile sensing has made a lot of progress recently.

NAT: Tactile sensing is how robots "feel" the objects they're touching. Until recently, most robots relied almost entirely on vision, which made it hard for them to grip things reliably (you'd be surprised by how important it is to have hands that can tell if something is smooth, slippery, or squishy). But the new tech Jeff mentions, such as sensors with tiny cameras under soft surfaces, is giving robots a sense of touch. It's a big deal for tasks like folding clothes or handling delicate objects, which obviously a lot of people are interested in getting robots to do. But the question now is whether robots can ever feel more holistically, like we can.

JEFF: It's made a lot of progress, especially with some of these technologies based on, essentially, starting with the GelSight technology, where there are actually cameras looking at the surface of items, and that technology is becoming more mature, more robust. I think it's a really interesting modality. I do think there's still a way to go in terms of being able to sense more holistically. That technology is hard to scale to something like skin around a robot's whole body. Not to say it can't be done, but I think there's a lot of interesting work there left to do. And I think that having those capabilities will allow robots to be more reactive when they are grabbing items, pushing them around, or placing them somewhere, or even doing more complex things like tying knots in rope or folding items like clothing, because the ability to feel those things is really, really important. There's actually a really interesting study that was done, I don't remember exactly when, showing what happens when a person's hand is numbed and they try to light a match, versus just lighting a match normally. It takes people a really long time to light a match when their hand is numb. And the reality is, most of the robots out there in the world commercially today are almost numb, maybe not 100% but pretty close to it. And so it's just much harder and takes longer to learn how to do these tasks, even if it is possible to do them with vision alone.

NAT: When we think about robots and the impact that they will have on our daily lives, we usually think of humanoids, but what I'm hearing is that the impact might be way less visible, let's say, and more in places like warehouses. Do you think there's going to be a silent robot revolution that the average person would not really see?

JEFF: That's a great question. I think to some extent that is happening even today. We're seeing a lot more AI and robots being deployed. We are part of that, but there are a lot of other companies doing amazing things there, especially with mobile robots in logistics. A ton of progress has happened there in the last 10 years, and that's continuing today, and we're in the early days of the manipulation changes there. Going back to the point about humanoids, I think humanoids do have a bright future, and I want to really make that clear. I'm not saying that they won't be prevalent, and they could do some amazing things. But I think what sometimes gets lost is this: there's an idea that humanoids will do everything and there will be no point to having specialized robots. I do not believe that to be the case. Imagine, for example, automating a shipping yard. I'm near the Port of Oakland, and I think you are as well. You see those shipping cranes out there. To me it makes a lot more sense to automate those cranes as robots than to have, again, 100 humanoids trying to hoist up a bunch of shipping containers. So there are these opportunities to multiply what humans can do.